There has been an ongoing debate among audiophiles about the relative merits of analog and digital recording. Analog proponents will tell you analog has a distinctive, “warmer” sound, and that a discerning ear can tell the difference between an analog and a digital recording. Digital fans counter that once you get above a certain digital resolution, the two are indistinguishable.

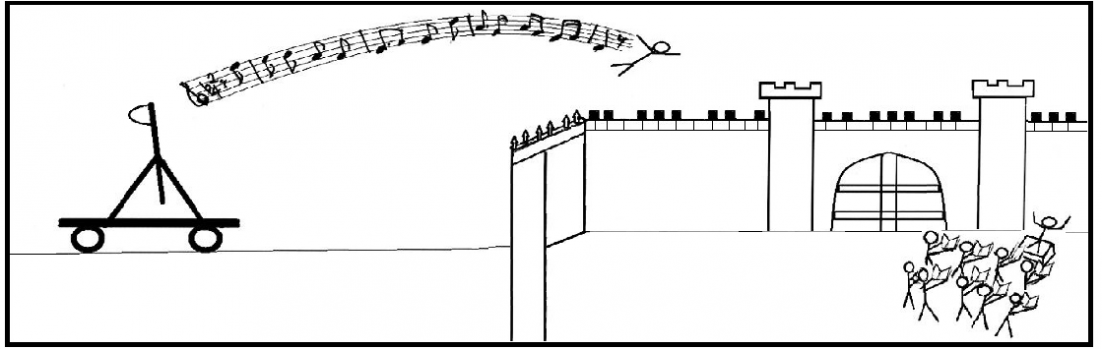

So what’s all this about? Let me explain.

An analog recording captures the sound waves (air pressure waves) produced by performers by turning them into electrical signals. During recording, those signals shape the groove of a vinyl record or the magnetization of a tape. The result is a direct, continuous representation of the sound.

When the medium is played back, a needle tracing the record’s groove, or a playback head reading the tape’s magnetic patterns, picks up those continuous fluctuations. They are turned back into an electrical signal, amplified, and sent to speakers that vibrate to reproduce the original sound.

When a digital recording is made, we start with the same sound waves, turned into electrical signals. But these signals then pass through an analog-to-digital converter and are turned into a series of ones and zeroes.

It is how these numbers are generated that determines how good the digital recording sounds. Let’s represent an analog signal as a curve.

How do you describe that curve as a series of numbers? First, you have to describe how high the curve is above a zero point at a given moment (your vertical measurement). Suppose the units you’re measuring with are big and clunky: you’ll get only an approximate measurement. It’s like measuring with a ruler that only has inches (or centimeters, for the metrically inclined) but no fractions of them.

If you use finer units, you can get a closer fit to the curve, and your measurement will be more accurate.
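If you like to see things in code, here’s a minimal Python sketch of the ruler idea. The `measure` function and the height of 3.37 are made up for illustration: the function simply rounds a height to the nearest whole number of ruler units.

```python
def measure(height: float, unit: float) -> float:
    """Round a height to the nearest whole number of ruler units (illustrative helper)."""
    return round(height / unit) * unit

true_height = 3.37  # hypothetical height of the curve at one moment

# Try a coarse ruler, then progressively finer ones
for unit in (1.0, 0.1, 0.01):
    reading = measure(true_height, unit)
    error = abs(reading - true_height)
    print(f"unit={unit}: reading={reading:.2f}, error={error:.3f}")
```

With a unit of 1 the reading is 3, off by 0.37; with a unit of 0.1 it’s 3.4, off by about 0.03. Each finer unit shrinks the gap between the reading and the true height.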

For digital music, finer measurements mean more bits, and that shows up in the bit rate. A bit is the smallest digital unit, a single zero or one; a kilobit is 1000 of them. So at 64 kilobits per second (kbps), a figure you might see in the information for an mp3 file, you’re storing 64,000 ones and zeroes for each second of audio. Sounds like a lot! But if you record a song at 64 kbps, or rip it (that is, convert it from a CD to a digital file) at that rate, it’s going to sound, well, bad. At 320 kbps, it’s going to sound pretty good. Audio on a CD is stored at 1411 kbps.

But let’s get back to the curve describing the sound. The curve isn’t just one point—it’s a vast number of points over time, indicated here by the horizontal axis. So now you have to measure along the horizontal axis. The more closely spaced your measurements are, the more the representation will look like the real curve. This is the sampling rate. The ruler analogy works here too. The finer your measurements, the better you’re going to reproduce the curve.

A CD uses a sampling rate of 44,100 samples per second. That means a measurement (as described above) is taken 44,100 times per second. As you can imagine, you can map a curve pretty carefully if your measured points are that close together. A studio master may be recorded at a sampling rate of 96,000 samples per second.
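Here’s a similar sketch for the horizontal axis, using a sine wave as a stand-in for the sound curve. The 1,000 Hz tone and the `sample_curve` helper are illustrative assumptions, not anything a CD player literally runs.

```python
import math

def sample_curve(freq_hz: float, sample_rate: int, n_samples: int) -> list[float]:
    """Measure a sine wave (a stand-in for the sound curve) at evenly spaced instants."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(n_samples)]

CD_RATE = 44_100
STUDIO_RATE = 96_000

# How far apart the measurements are along the time axis, in microseconds
for rate in (CD_RATE, STUDIO_RATE):
    print(f"{rate} samples/s -> one measurement every {1e6 / rate:.1f} microseconds")

# One millisecond of a hypothetical 1,000 Hz tone, measured at the CD rate
samples = sample_curve(1_000, CD_RATE, CD_RATE // 1000)
print(len(samples), "measurements")
```

At 44,100 samples per second, a measurement comes roughly every 22.7 microseconds; at 96,000, roughly every 10.4. One millisecond of the tone at the CD rate works out to 44 measurements.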

If you start delving into audiophile sites, however, you’re going to find another term that’s important to know: bit depth. You’ll see phrases like “16-bit recording” or “24-bit recording” touted as premium audio. And you might think, “Hey, wait a minute! That’s a low number. We were talking about 1411 kbps; how can 24 bits be good?”

Good question. The answer is that bit rate (like 320 kbps) tells you the total number of bits recorded per second, while bit depth tells you the number of bits recorded per sample. And you’re taking a lot of samples per second if you want good sound.

Bit depth is the vertical measurement from our ruler analogy. The more bits in each measurement, the finer the markings on the ruler, and the more accurate the measurement. So a measurement with 24 bits in it is more detailed than a measurement with 16 bits in it.
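Here’s how the number of bits translates into ruler markings, as a quick Python sketch. Each extra bit doubles the number of distinct values a measurement can take. Treating the signal as spanning -1.0 to +1.0 is an assumption made just to put a size on each step.

```python
def levels(bit_depth: int) -> int:
    """Number of distinct values a sample with this many bits can take."""
    return 2 ** bit_depth

for bits in (16, 24):
    n = levels(bits)
    # Step size if the signal spans -1.0 to +1.0 (an assumed scale for illustration)
    step = 2.0 / n
    print(f"{bits}-bit: {n:,} levels, step of about {step:.2e}")
```

16 bits gives 65,536 possible values per sample; 24 bits gives 16,777,216, so each step on the 24-bit ruler is 256 times finer.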

And now for some magic! Let’s think about that CD. Its audio is sampled 44,100 times per second. The bit depth for a CD is 16 bits per sample. And you have to measure two channels to get stereo sound. Let’s multiply those. With me so far?

44,100 samples per second × 16 bits per sample × 2 channels = 1,411,200 bits per second

Wait, where have we seen that number before? It’s the kilobits per second (kbps) number for a CD!

1,411,200/1000 (1000 bits in a kilobit) = 1411 kbps (rounded off).

Ta-da! Magic! OK, it’s math, but to me it looks like magic.

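Sampling rate × bit depth × number of channels = bit rate.

This holds for uncompressed audio like a CD. Formats such as mp3 use lossy compression, so their bit rates are much lower and don’t follow this formula.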
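The same multiplication as a short Python sketch. The `bit_rate` helper is hypothetical, and the 24-bit depth for the studio master is an assumption, since only its sampling rate is given above.

```python
def bit_rate(sample_rate: int, bit_depth: int, channels: int) -> int:
    """Bits per second for uncompressed audio: sampling rate x bit depth x channels."""
    return sample_rate * bit_depth * channels

cd = bit_rate(44_100, 16, 2)
print(cd, "bits per second =", cd / 1000, "kbps")

# A studio master at 96,000 samples per second; 24-bit depth is an assumption
studio = bit_rate(96_000, 24, 2)
print(studio / 1000, "kbps")
```

The CD line prints 1411200 bits per second, or 1411.2 kbps; the assumed 24-bit, 96,000-sample studio master comes out to 4608 kbps, more than three times as much.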

(If you’re wondering where the 44,100 came from, it has to do with the highest frequency humans can hear (ideally, around 20,000 Hz), doubled, plus a little headroom, and the Nyquist theorem… and this post is already too long.)
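A rough check of that footnote in Python. The Nyquist frequency, half the sampling rate, is the ceiling on what a given rate can capture. The 20,000 Hz hearing limit is the commonly cited round figure for young, healthy ears, used here as an assumption.

```python
def nyquist_limit(sample_rate: int) -> float:
    """Nyquist frequency: signals must stay below half the sampling rate to be captured."""
    return sample_rate / 2

HEARING_LIMIT_HZ = 20_000  # assumed upper limit of young, healthy hearing

for rate in (44_100, 96_000):
    limit = nyquist_limit(rate)
    print(f"{rate} samples/s captures up to {limit:,.0f} Hz "
          f"({limit - HEARING_LIMIT_HZ:,.0f} Hz above {HEARING_LIMIT_HZ:,} Hz)")
```

CD audio can capture up to 22,050 Hz, a margin of about 2,050 Hz above that hearing limit; a 96,000-sample studio master reaches 48,000 Hz.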

So let’s sum this all up.

When you convert from analog to digital, you place limits on how fine your measurements are and how often you take them. The finer your measurements and the more often you take them, the more numbers you generate to describe a given section of the curve. But all these numbers take up space, so files for high-quality digital audio are bigger, though they’re a closer representation of that curve. If you use really fine units and measure many times, you’ll get very close to the shape of the curve, so when it’s played, it will sound very much like the original.

But it will never be exactly the same. It can’t be. Analog is continuous. Every digital measurement is rounded to the nearest mark on the ruler, and the measurements are a finite distance apart, so a tiny part of the curve is always lost.
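To see why the rounding never fully goes away, here’s a Python sketch that samples 10 milliseconds of a tone at the CD rate and rounds each sample to a 16-bit ruler. The tone’s frequency (1,000 Hz), its level (half of full scale, so it never touches the ends of the ruler) and the `quantize` helper are all illustrative assumptions.

```python
import math

def quantize(x: float, bit_depth: int) -> float:
    """Round x to the nearest step of a bit_depth-bit ruler spanning -1.0 to +1.0."""
    step = 2.0 / 2 ** bit_depth
    return round(x / step) * step

RATE = 44_100
FREQ = 1_000  # hypothetical test tone, Hz

errors = []
for n in range(RATE // 100):  # 10 ms worth of samples
    # Tone at half of full scale (assumed level), measured at sample n
    true_value = 0.5 * math.sin(2 * math.pi * FREQ * n / RATE)
    errors.append(abs(quantize(true_value, 16) - true_value))

step = 2.0 / 2 ** 16
print(f"largest rounding error: {max(errors):.2e} (half a step is {step / 2:.2e})")
```

The largest error comes out below 0.00002, because rounding never misses by more than half a step (about 1.5 × 10⁻⁵ here), but it isn’t zero.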

So you should be able to tell them apart, right?

The truth is that the human ear has limitations. Dogs hear things that we can’t. With age, our range of hearing becomes smaller, independent of any external assaults on our hearing.

There comes a point at which the digital representation of the curve of an analog signal is “close enough” that a human ear cannot discern the difference.

The location of that point is at the center of the entire debate. Add the notorious subjectivity and individuality of human experience, and no one will ever settle exactly where that point lies.

So what do you do? You can conduct your own tests when you’re digitizing analog music, or ripping a CD to mp3 (try selecting different kbps values), or even if you’re planning on using a streaming service (different streaming services provide different recording quality levels). Find out where the “vanishing point” is for you, or what sounds “good enough.”

Another consideration is storage space. High-quality files take up a lot of room, so decide how much space you can dedicate to your digital collection. As storage devices become larger (and by larger I mean capacity, not physical size: there are 128 GB microSD cards the size of your fingernail) and less expensive, storage space becomes less of an issue, unless you have a gargantuan collection of music.
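For a rough sense of scale, here’s a Python sketch that turns bit rates into file sizes. The three-minute song is hypothetical, 1 GB is counted as a billion bytes (the way card makers usually count), and the estimate ignores metadata and file-system overhead.

```python
def file_size_bytes(bit_rate_bps: int, seconds: int) -> int:
    """Approximate audio data size: bits per second x duration, 8 bits per byte."""
    return bit_rate_bps * seconds // 8

SONG_SECONDS = 3 * 60       # a hypothetical three-minute song
CARD_BYTES = 128 * 10**9    # 128 GB, counting 1 GB as a billion bytes (assumption)

for label, bps in (("CD quality (1411.2 kbps)", 1_411_200), ("320 kbps mp3", 320_000)):
    size = file_size_bytes(bps, SONG_SECONDS)
    print(f"{label}: {size / 1e6:.1f} MB per song, about {CARD_BYTES // size:,} songs per card")
```

A three-minute song comes out to about 31.8 MB at CD quality versus 7.2 MB at 320 kbps, so the same 128 GB card holds roughly 4,031 CD-quality songs or 17,777 at 320 kbps.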

Weighing these, you should be able to find the right audio representation for your purposes.

And if the going gets tough, or your head starts to spin from all the numbers, I have the perfect answer:

Sit back and put on a record (or a CD).
